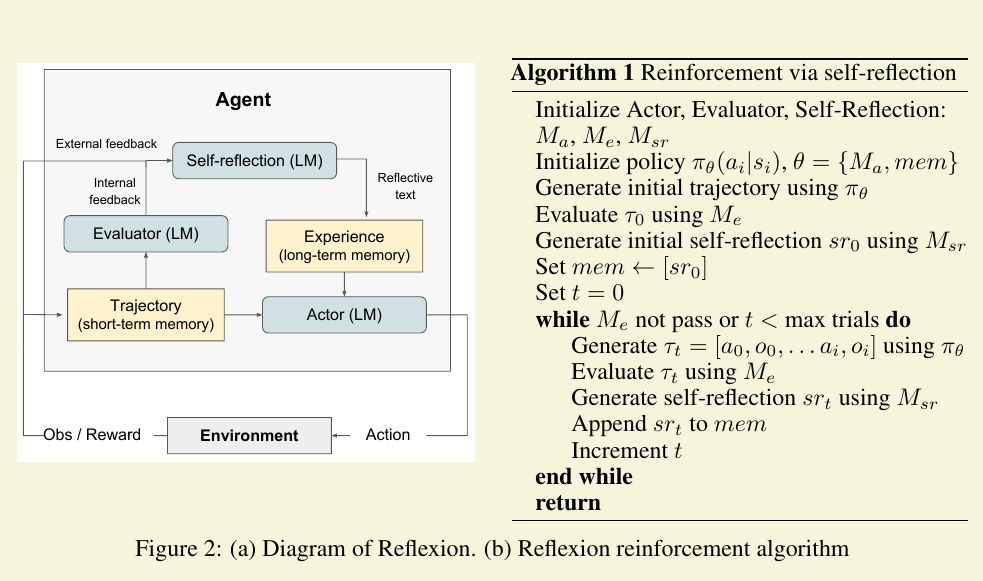

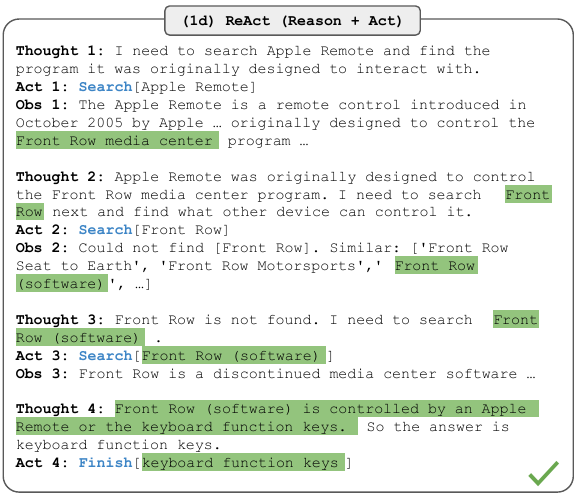

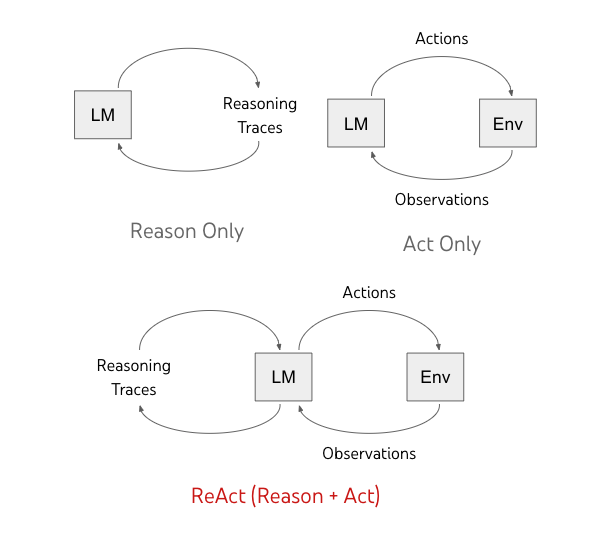

Reflexion + ReAct with Gemini 1.5

Agentic Patterns

Reflection

Tool Use

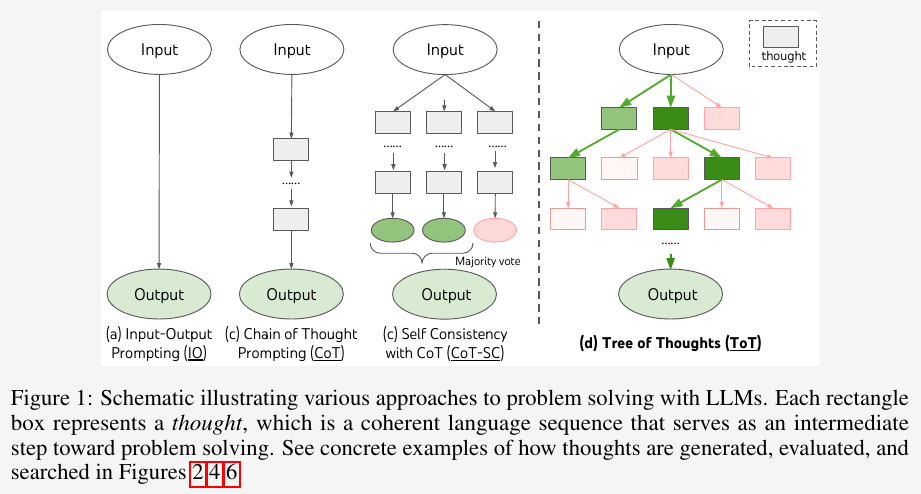

Prompt Engineering

Paper Reading

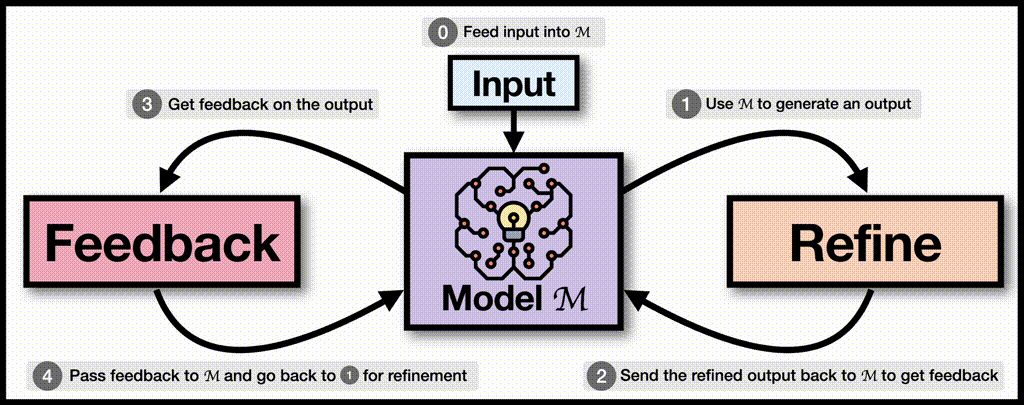

Self-refine with Gemini 1.5

Agentic Patterns

Reflection

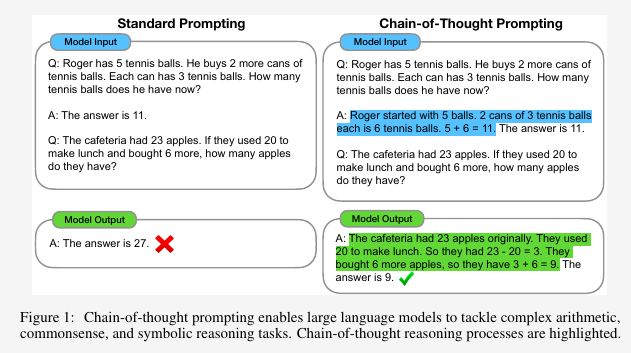

Prompt Engineering

Paper Reading

A function calling agent for document QA

Agentic Patterns

Function calling

Tool Use

LlamaIndex

Mistral

Function calling with Mixtral-8x22B-Instruct-v0.1

Agentic Patterns

Function calling

Tool Use

Tabular data

Mistral

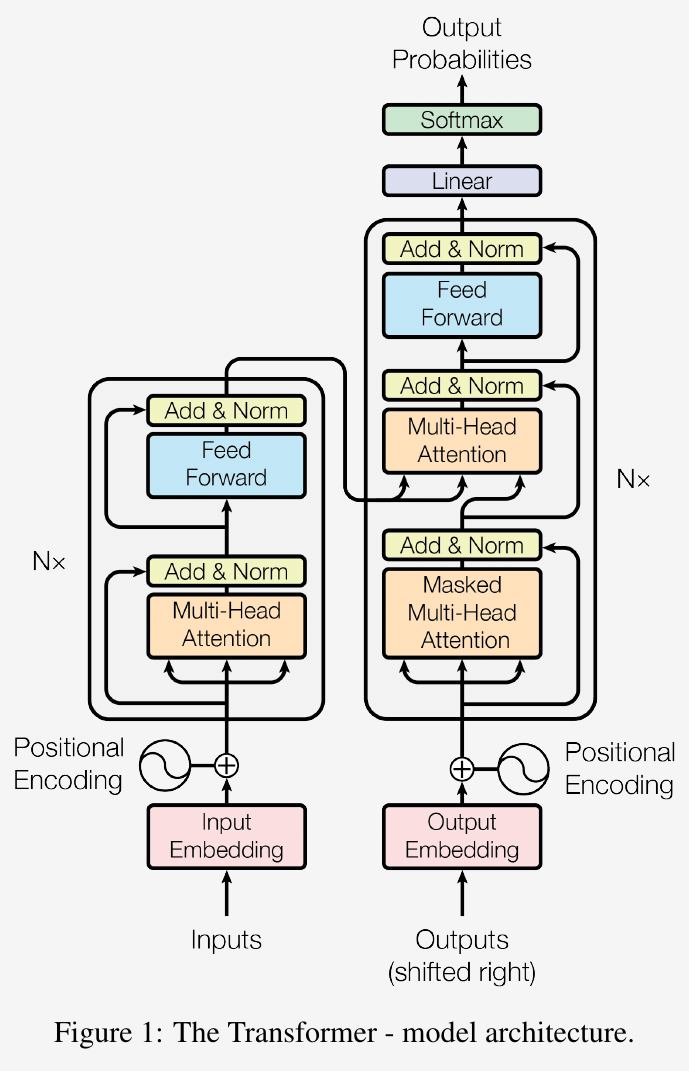

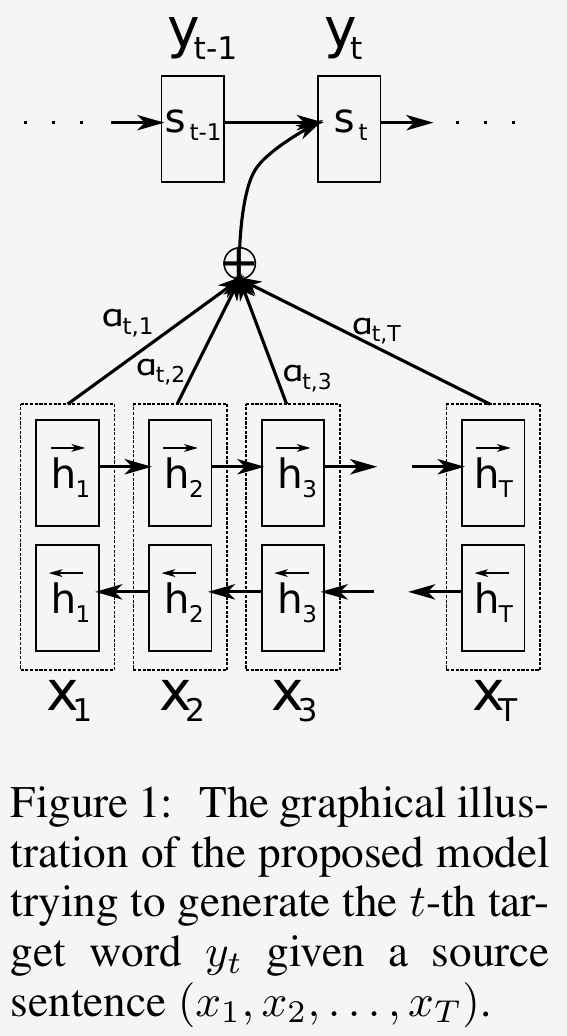

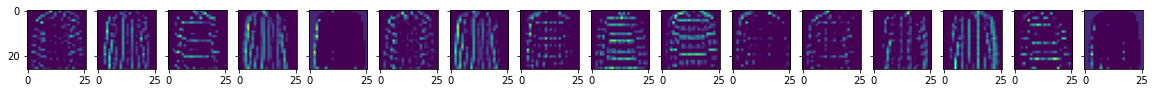

Implementing the original Transformer model in PyTorch

Paper Reading

Attention Mechanism

Transformer

PyTorch